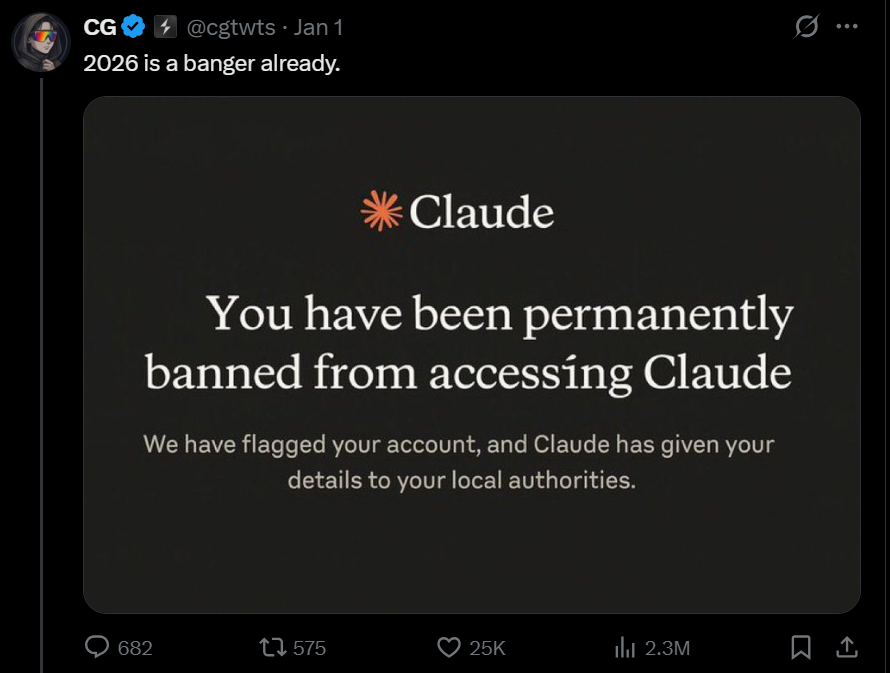

Anthropic has denied reports that it is banning legitimate accounts, following a viral post on X claiming that the company behind Claude had banned a user.

Claude Code is one of the most capable AI coding agents available today, and it is more widely used than rival tools such as Gemini CLI or Codex.

With that popularity comes trolling, including plenty of fake screenshots shared as “proof” of bans.

The viral X post included a screenshot claiming that Claude had permanently banned the account and shared the user's details with local authorities.

The message is designed to look scary, but Anthropic says it does not match anything Claude actually shows users.

In a statement shared with BleepingComputer, Anthropic said the image isn’t real and that the company does not use that language or display that kind of message.

The company added that the screenshot is inaccurate and appears to be a fake that “circulates every few months.”

That said, Claude accounts can still be restricted.

Anthropic, like other AI companies, enforces strict rules to prevent misuse of AI systems.

Accounts can face restrictions for repeated policy violations, such as attempts to use AI agents for illegal activities, including weapons-related requests.

